At the recent Linux Storage, Filesystems, MM, and BPF Summit, Alan Jowett from Microsoft, who also serves on the eBPF Steering Committee, presented a session titled “BPF performance: comparison of eBPF for Windows vs. Linux BPF”. The session examined how BPF performance differs across these two major platforms and emphasized the need for cross-platform consistency in performance-sensitive applications. Here’s a summary of the key points discussed.

The Need for Cross-Platform Performance

As BPF programs increasingly become cross-platform, developers expect their performance to be consistent, regardless of the underlying operating system. Jowett highlighted that while perfect parity might be challenging, the goal is to achieve performance that is at least comparable between Windows and Linux. This is crucial because BPF programs are often used in scenarios where performance is critical, such as network operations and system monitoring.

Key Performance Factors

One of the primary findings from Jowett’s work is that the cost of helper functions affects BPF program performance more than the BPF code itself does. The study examined several aspects of performance, including the cost in cycles per byte for network operations and the effect on jitter and latency. Another area of interest was the overhead of transitioning from the kernel into the BPF virtual machine, which differs between Windows and Linux because the two kernels handle Read-Copy-Update (RCU) differently.

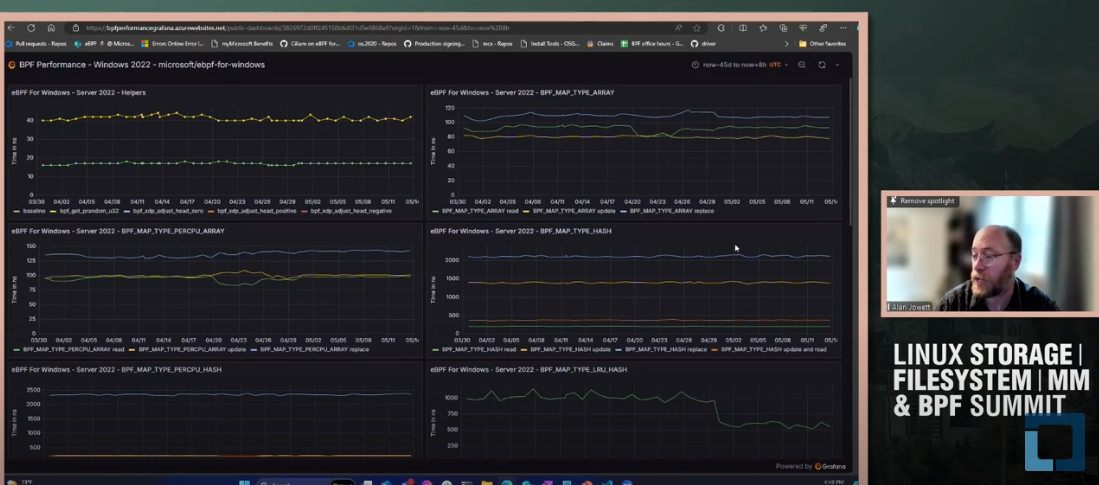

Introducing the BPF Performance Suite

To make the comparison repeatable, the team developed the BPF Performance Suite. This MIT-licensed project is a collection of small BPF programs, each designed to test a specific aspect of the BPF runtime, with a primary focus on helper functions. Because it uses the cross-platform libbpf library, the suite can load and execute the same BPF programs on both Windows and Linux. The tests are run on varying numbers of CPUs, and the suite reports the mean invocation time for each.
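The idea of a mean invocation time can be illustrated with a small user-space harness. This is only a sketch under stated assumptions: it does not load or run BPF programs, it is single-threaded rather than spread across CPUs, and the names `mean_invocation_ns`, `workload_fn`, and `baseline_workload` are hypothetical rather than taken from the suite. It assumes a POSIX system that provides `clock_gettime`.

```c
#ifndef _POSIX_C_SOURCE
#define _POSIX_C_SOURCE 199309L  /* request clock_gettime on strict compilers */
#endif
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <time.h>

/* Hypothetical signature for the code under test. */
typedef int (*workload_fn)(void *ctx);

/* Read a monotonic clock and return the value in nanoseconds. */
static uint64_t now_ns(void)
{
    struct timespec ts;
    int rc = clock_gettime(CLOCK_MONOTONIC, &ts);
    assert(rc == 0);
    (void)rc;
    return (uint64_t)ts.tv_sec * 1000000000ull + (uint64_t)ts.tv_nsec;
}

/* Call fn(ctx) `iterations` times and return the mean time per call in ns. */
double mean_invocation_ns(workload_fn fn, void *ctx, uint64_t iterations)
{
    assert(fn != NULL && iterations > 0);
    uint64_t start = now_ns();
    for (uint64_t i = 0; i < iterations; i++)
        fn(ctx);
    uint64_t end = now_ns();
    return (double)(end - start) / (double)iterations;
}

/* Stand-in for a trivial workload: count the call and return zero. */
int baseline_workload(void *ctx)
{
    uint64_t *calls = ctx;
    (*calls)++;
    return 0;
}
```

Timing the whole loop and dividing by the iteration count amortizes the cost of reading the clock, which matters when a single invocation takes only a few nanoseconds.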

Test Methodology and Findings

The performance suite conducts a variety of tests, including:

- Baseline Tests: A simple BPF program that returns zero.

- Generic Map Tests: Tests for common helper functions across multiple map types, such as lookup, update, and delete operations.

- Specific Function Tests: Performance of specific helper functions like `bpf_get_prandom_u32` and `bpf_ktime_get_ns`.

- Special Case Tests: For instance, LPM (Longest Prefix Match) tests using BGP data to emulate real-world usage.
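For readers unfamiliar with longest prefix match, the following user-space sketch shows the lookup rule that the LPM tests exercise: among all prefixes that contain an address, the most specific one wins. It is an illustration only. It uses a linear scan instead of the trie a BPF runtime would normally use (Linux exposes this as `BPF_MAP_TYPE_LPM_TRIE`), and the names `lpm_entry_t` and `lpm_lookup` are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* One routing-style entry: an IPv4 prefix and an associated value. */
typedef struct {
    uint32_t prefix;     /* network address in host byte order */
    uint8_t  prefix_len; /* number of significant leading bits, 0..32 */
    uint32_t value;      /* payload, e.g. a next-hop identifier */
} lpm_entry_t;

/* Build a mask with the top `len` bits set. */
static uint32_t prefix_mask(uint8_t len)
{
    assert(len <= 32);
    return len == 0 ? 0u : 0xFFFFFFFFu << (32 - len);
}

/* Return the matching entry with the longest prefix, or NULL if none match. */
const lpm_entry_t *lpm_lookup(const lpm_entry_t *table, size_t count,
                              uint32_t addr)
{
    const lpm_entry_t *best = NULL;
    for (size_t i = 0; i < count; i++) {
        uint32_t mask = prefix_mask(table[i].prefix_len);
        if ((addr & mask) != (table[i].prefix & mask))
            continue; /* address is outside this prefix */
        if (best == NULL || table[i].prefix_len > best->prefix_len)
            best = &table[i];
    }
    return best;
}
```

With entries for 10.0.0.0/8 and 10.1.0.0/16, a lookup of 10.1.2.3 selects the /16 entry because it is the more specific match. A table built from BGP data contains many such overlapping prefixes, which is presumably why it makes a realistic workload.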

One notable finding was that eBPF for Windows performed better at random number generation. It implements a Mersenne Twister algorithm rather than relying on the Windows kernel’s built-in random number generator, which scales poorly. In other areas, such as tail call performance and map-in-map performance, Windows lagged behind Linux. This gap was partly attributed to differences in JIT compilation strategy: the Windows JIT compiler did not optimize register saving as efficiently as the Linux JIT.
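As a point of reference, here is a compact sketch of the standard 32-bit Mersenne Twister (MT19937). The text does not say which variant eBPF for Windows uses or how it manages generator state, so both the choice of MT19937 and the names `mt19937_t`, `mt19937_seed`, and `mt19937_next` are assumptions made for illustration.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define MT_N 624 /* words of state */
#define MT_M 397 /* offset used by the recurrence */

/* Self-contained generator state; no global or shared data. */
typedef struct {
    uint32_t state[MT_N];
    int index;
} mt19937_t;

/* Initialize the state from a 32-bit seed. */
void mt19937_seed(mt19937_t *mt, uint32_t seed)
{
    assert(mt != NULL);
    mt->state[0] = seed;
    for (int i = 1; i < MT_N; i++) {
        uint32_t prev = mt->state[i - 1];
        mt->state[i] = 1812433253u * (prev ^ (prev >> 30)) + (uint32_t)i;
    }
    mt->index = MT_N; /* force a twist before the first output */
}

/* Regenerate all MT_N words of state in place. */
static void mt19937_twist(mt19937_t *mt)
{
    for (int i = 0; i < MT_N; i++) {
        uint32_t y = (mt->state[i] & 0x80000000u) |
                     (mt->state[(i + 1) % MT_N] & 0x7FFFFFFFu);
        uint32_t next = mt->state[(i + MT_M) % MT_N] ^ (y >> 1);
        if (y & 1u)
            next ^= 0x9908B0DFu;
        mt->state[i] = next;
    }
    mt->index = 0;
}

/* Return the next 32-bit output, applying the tempering transform. */
uint32_t mt19937_next(mt19937_t *mt)
{
    assert(mt != NULL);
    if (mt->index >= MT_N)
        mt19937_twist(mt);
    uint32_t y = mt->state[mt->index++];
    y ^= y >> 11;
    y ^= (y << 7) & 0x9D2C5680u;
    y ^= (y << 15) & 0xEFC60000u;
    y ^= y >> 18;
    return y;
}
```

Because all state lives in the caller-supplied struct, each CPU could own a separate instance and generate numbers without touching shared data. That is one plausible way to avoid a scaling bottleneck, though the text does not confirm that eBPF for Windows is structured this way. Note that the Mersenne Twister is not cryptographically secure.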

Challenges and Future Work

Jowett also discussed the challenges in creating a controlled environment for performance testing. Using GitHub-hosted runners introduced variability and limited control over the Linux kernel versions, affecting the reliability of comparisons. Moving forward, the goal is to establish dedicated VMs for side-by-side comparisons to minimize variability and gain more precise insights into performance differences.